Quite a while ago, a long-time customer called me and asked if I could help them with a WooCommerce webshop. Now, I had some experience with WordPress, but it wasn't much, and I had no WooCommerce experience except for installing it. However, I do have extensive experience with e-commerce and writing high-quality software.

The webshop wasn't stable at all. Updates were lagging behind, and with each (plugin) update, the site would go offline for a few minutes. On top of that, there was no version control or deployment procedure, which made maintenance hard for me. It's out of scope for this article, but the site was also running on a VPS alongside some other sites, which brought it down under heavy load.

Getting this site to deploy updates automatically took a few steps. Let's go over them.

Version control

The first step was to bring the site under version control using Git. Even though it's not recommended, I included all of WordPress and all plugins. This means that with every update, I have to commit the updated plugin code, which causes a lot of noise in Git. But you have to start somewhere.

Deployer

The next step was to implement Deployer. Deployer is a tool to deploy your website without downtime. Setting it up is always a hassle, as you will most likely take the site down while installing it. I would rather not do this to existing sites, but it makes every deployment after that less stressful.

The goal here was to make deployments boring: putting a new version online without surprises. Boring is good.
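The reason Deployer makes this boring is its release layout. Each deploy is built in its own release directory, and the `current` symlink is only repointed once that release is complete. Old releases are pruned after a configurable number. The sketch below models that idea in memory rather than on disk. The class and method names are hypothetical and not Deployer's API (Deployer itself is written in PHP).

```typescript
// Hypothetical in-memory model of a Deployer-style layout: every deploy gets
// its own release, and "current" only moves once that release is complete.
interface Release {
  id: number;
  files: Record<string, string>;
}

class ReleaseManager {
  private releases: Release[] = [];
  private currentId: number | null = null;
  private nextId = 1;
  private readonly keep: number;

  // Number of releases to keep around for rollbacks (at least the current one).
  constructor(keep = 5) {
    this.keep = Math.max(1, keep);
  }

  deploy(build: () => Record<string, string>): number {
    // 1. Build the new release while the current one keeps serving visitors.
    //    If build() throws, nothing below runs and the old release stays live.
    const files = build();
    const release: Release = { id: this.nextId++, files };
    this.releases.push(release);
    // 2. Switch in one step (on disk: repointing the `current` symlink).
    this.currentId = release.id;
    // 3. Prune the oldest releases beyond the keep limit.
    while (this.releases.length > this.keep) {
      this.releases.shift();
    }
    return release.id;
  }

  current(): Release | undefined {
    return this.releases.find((r) => r.id === this.currentId);
  }

  releaseIds(): number[] {
    return this.releases.map((r) => r.id);
  }
}
```

Suppose the build step fails, for example because `composer install` errors out. In that case `current` keeps pointing at the previous release, so a broken deploy never reaches visitors.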

GitHub Actions (for deployments)

To make deployments even more boring, I added GitHub Actions. Whenever a change is pushed to the master branch, the site is deployed through the GitHub platform.

The site also has a staging environment: when a change is pushed to the staging branch, the site is deployed to staging.
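Under the hood, this is just a mapping from branch to environment. In the actual workflow it would typically be expressed as branch filters on the `push` trigger. The TypeScript sketch below only illustrates the decision. The function name, and the choice to deploy nothing for other branches, are assumptions.

```typescript
type Environment = "production" | "staging";

// Hypothetical mapping mirroring the setup described above.
const DEPLOY_TARGETS = new Map<string, Environment>([
  ["master", "production"],
  ["staging", "staging"],
]);

// Accepts a bare branch name or a full ref as found in GitHub's GITHUB_REF
// variable (e.g. "refs/heads/master"); returns null when nothing should deploy.
function deployTargetFor(ref: string): Environment | null {
  const branch = ref.replace(/^refs\/heads\//, "");
  return DEPLOY_TARGETS.get(branch) ?? null;
}
```

Pushes to feature branches or tags return `null`, so they never reach either server.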

Hey, I'm running a developer-focused newsletter called MageDispatch.com, which is for the community and by the community. There you can share links that you think the community should know about. If you like this kind of technical content, you will like this newsletter too.

Composer

Now we are coming to the gritty part. I didn't want to composerize all plugins at once. That would take a lot of time and carry a big risk of breaking things. So, I took the slow route.

Whenever WordPress reported an update for a plugin, I tried to move the plugin to Composer. This does not work for every plugin, as some are paid and behind a login without Composer support. But for all free plugins that are listed on WordPress.org, a Composer version is available thanks to WPackagist.org.
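WPackagist mirrors the WordPress.org directory under predictable package names. A plugin with the slug `woocommerce` becomes `wpackagist-plugin/woocommerce`, and the project's composer.json needs `https://wpackagist.org` as a Composer repository. The sketch below generates such a composer.json fragment for a list of plugins. Several details are my assumptions and not part of the original setup:

- the helper name;
- the `^` constraints;
- the use of `composer/installers` to place plugins in `wp-content/plugins`.

```typescript
// Hypothetical helper: build a composer.json fragment that installs
// WordPress.org plugins through WPackagist instead of committing them to Git.
interface PluginPin {
  slug: string;    // WordPress.org slug, e.g. "woocommerce"
  version: string; // version currently installed on the site
}

function wpackagistComposerJson(plugins: PluginPin[]): Record<string, unknown> {
  const deps: Record<string, string> = {
    // composer/installers moves packages of type "wordpress-plugin"
    // into the directory configured under "installer-paths" below.
    "composer/installers": "^2.0",
  };
  for (const plugin of plugins) {
    // Caret constraint (assumption): allow minor/patch updates, block majors.
    deps[`wpackagist-plugin/${plugin.slug}`] = `^${plugin.version}`;
  }
  return {
    repositories: [{ type: "composer", url: "https://wpackagist.org" }],
    require: deps,
    extra: {
      "installer-paths": {
        "wp-content/plugins/{$name}/": ["type:wordpress-plugin"],
      },
    },
  };
}
```

Printing `JSON.stringify(wpackagistComposerJson([...]), null, 2)` gives a fragment you can merge into composer.json. After that, the plugin directory can be removed from Git.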

This process took a few months, but eventually more and more plugins were removed from Git and reduced to a few lines in the composer.json and composer.lock files. However, some plugins are still stored in the Git repository to this day. I really want to move those out, and that is probably possible with the help of Satis or GitHub Packages, but that's something for the future.

Dependabot

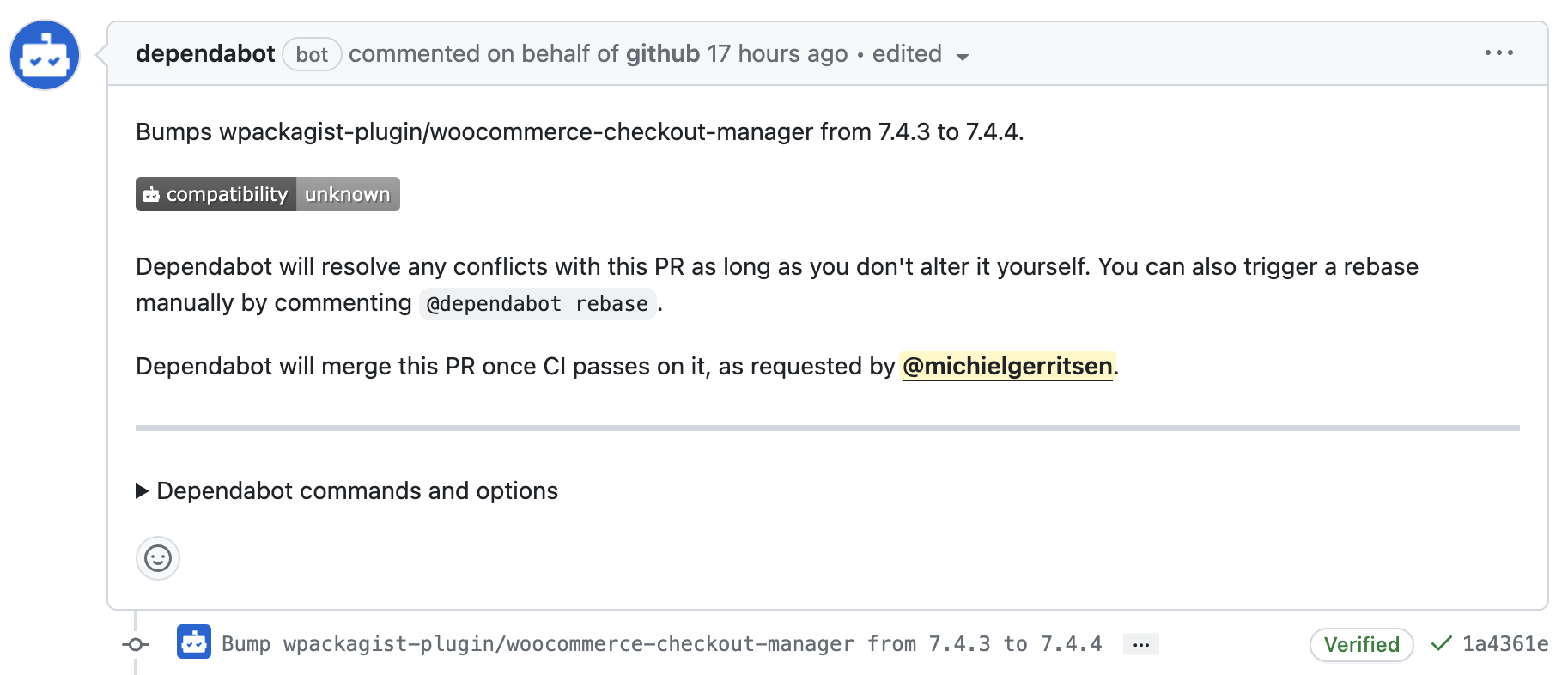

Now we are really getting into automating stuff. Having everything in Composer is great, but you still need to update your dependencies every now and then. Luckily for us, we can automate this process: GitHub offers a very slick Dependabot integration.

For those who don't know: Dependabot manages your dependencies. It opens your composer.json and composer.lock (but also package.json, yarn.lock and other files of dependency managers), and analyses which dependencies your project has. It checks the exact installed version, and it checks which versions are available. If there is an update available, it creates a pull request for you to update that dependency.

You now only have to review the pull request and, if you approve it, merge it. Dependabot has some more options, like rebasing the PR when the main branch changes, ignoring specific versions, and more.
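For each dependency, the core of what Dependabot does can be sketched in three steps:

1. Read the locked version.
2. Compare it with the newest published version.
3. Propose an update when the newest version is higher.

The sketch below assumes plain `MAJOR.MINOR.PATCH` versions; real Composer constraints and pre-release tags are more involved. It uses hypothetical names and is not Dependabot's implementation.

```typescript
// Parse a plain "MAJOR.MINOR.PATCH" version (an optional leading "v" is
// ignored, missing parts count as 0). Anything else is rejected.
function parseVersion(version: string): [number, number, number] {
  const parts = version.replace(/^v/, "").split(".");
  if (parts.length > 3 || parts.some((p) => !/^\d+$/.test(p))) {
    throw new Error(`Unsupported version string: ${version}`);
  }
  const nums = parts.map(Number);
  return [nums[0] ?? 0, nums[1] ?? 0, nums[2] ?? 0];
}

// Negative if a < b, zero if equal, positive if a > b (numeric, not lexical).
function compareVersions(a: string, b: string): number {
  const [a1, a2, a3] = parseVersion(a);
  const [b1, b2, b3] = parseVersion(b);
  return a1 - b1 || a2 - b2 || a3 - b3;
}

interface LockedPackage {
  name: string;    // e.g. "wpackagist-plugin/woocommerce"
  version: string; // version pinned in composer.lock
}

interface ProposedUpdate {
  name: string;
  from: string;
  to: string;
}

// One proposed update (i.e. one pull request) per package whose newest
// published version is higher than the locked one.
function findUpdates(locked: LockedPackage[], latest: Record<string, string>): ProposedUpdate[] {
  const updates: ProposedUpdate[] = [];
  for (const pkg of locked) {
    const newest = latest[pkg.name];
    if (newest !== undefined && compareVersions(newest, pkg.version) > 0) {
      updates.push({ name: pkg.name, from: pkg.version, to: newest });
    }
  }
  return updates;
}
```

Comparing numerically matters. A plain string comparison would consider `1.10.0` older than `1.9.3`.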

Don't use GitHub but still want something similar? Luckily, there is also Renovate, which lets you achieve the same effect on GitLab or Bitbucket.

Combining PRs

This is a small side step, as it isn't needed in the end result. But I used a GitHub Actions workflow to combine all open pull requests into a single one, which makes managing and testing everything much easier. It takes all open pull requests, tries to combine them, and opens a new pull request with the result. When you then merge this big PR, Dependabot closes all the others. You can find the script here:

https://github.com/hrvey/combine-prs-workflow
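Conceptually, the combine step works as follows:

- It selects the open pull requests whose branch starts with a given prefix (Dependabot's branches start with `dependabot/` by default).
- It merges those branches into a new branch one by one.
- It skips any branch that conflicts.

The sketch below models only that selection and bookkeeping. The actual git merge is passed in as a callback, and all names are hypothetical rather than taken from the linked workflow.

```typescript
interface PullRequest {
  number: number;
  branch: string;
  title: string;
}

interface CombineResult {
  combined: PullRequest[];
  skipped: PullRequest[]; // e.g. branches that conflicted during the merge
  body: string;           // description for the new combined pull request
}

// Hypothetical sketch: try to merge every matching branch into one combined
// branch. `tryMerge` stands in for the real git merge and returns false on a
// conflict, so one bad branch does not block the rest.
function combinePullRequests(
  open: PullRequest[],
  tryMerge: (branch: string) => boolean,
  prefix = "dependabot/",
): CombineResult {
  const combined: PullRequest[] = [];
  const skipped: PullRequest[] = [];
  for (const pr of open) {
    if (!pr.branch.startsWith(prefix)) continue;
    (tryMerge(pr.branch) ? combined : skipped).push(pr);
  }
  const body = combined.map((pr) => `- #${pr.number} ${pr.title}`).join("\n");
  return { combined, skipped, body };
}
```

Listing the original pull requests as `#number` references in the body keeps it easy to see which updates ended up in the combined PR.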

Cypress

We are getting pretty far by now. Updates are rolling in, but how can we be sure that those updates aren't breaking anything? We can't, and that's where Cypress comes in. Cypress lets you write and run end-to-end tests: it automates a browser to perform actions for you, and what those actions are is up to you. In this case, there are a few tests. Some are specific to this webshop; others are more generic, like adding a product to the cart.

Writing the tests is not that hard, but how do you tie them into your project? Let's see.

GitHub Actions (again!)

Haven't we already done something with GitHub Actions? Correct: deployments already run through GitHub Actions. But we can add another step to that.

This step is probably the most complex of all. In the previous step, we wrote end-to-end tests; in this step, we need to execute them for each pull request. That way, each pull request is tested in isolation, and we can be sure it doesn't bring down the site.

The hard part is that we need to set up WordPress so it runs like it normally does. That means we need an HTTP server and a database server, and that database needs to be filled with data.
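One practical detail when starting the web and database servers in CI: a freshly started database container usually needs a few seconds before it accepts connections. Because of that, the fixture import and the Cypress run should wait until the services respond. A generic retry helper is sketched below under that assumption. The probe is a placeholder for whatever check you use, such as a TCP connect to the database or an HTTP request to the site, and the names are hypothetical.

```typescript
// Hypothetical helper: poll `probe` until it reports the service as ready,
// waiting `delayMs` between attempts. Resolves with the number of attempts
// used, or rejects once all `attempts` probes have failed.
async function waitForService(
  probe: () => Promise<boolean>,
  attempts = 30,
  delayMs = 2000,
): Promise<number> {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    let ready: boolean;
    try {
      ready = await probe();
    } catch {
      // e.g. "connection refused" while the database is still initialising
      ready = false;
    }
    if (ready) return attempt;
    if (attempt < attempts) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`Service not ready after ${attempts} attempts`);
}
```

In the workflow, you would call this before importing the database fixture and again before pointing Cypress at the local site. A failing probe then ends the job with a clear error instead of a confusing test failure.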

Tying it all together

Adding yet another GitHub Actions title in here is so boring, but that's what we need (again). I'm using the ahmadnassri/action-dependabot-auto-merge GitHub Action to merge pull requests automatically.

What the action does is quite simple: when the pull request's update stays within its bounds (for example, only minor updates and no majors, depending on your configuration), it adds a comment to the pull request:

@dependabot merge

And that's it. Dependabot merges the pull request for you.
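The "within bounds" check is essentially a semantic-versioning comparison. It classifies the jump from the old to the new version as a patch, minor, or major update, and only comments when that jump does not exceed the configured maximum. The sketch below is a simplified model of that decision, not the action's source code. The function names and the default of `minor` are assumptions.

```typescript
type UpdateType = "patch" | "minor" | "major";

const RANK: Record<UpdateType, number> = { patch: 0, minor: 1, major: 2 };

// Classify a version jump, assuming plain numeric "MAJOR.MINOR.PATCH" strings.
function classifyUpdate(from: string, to: string): UpdateType {
  const [fromMajor, fromMinor] = from.split(".").map(Number);
  const [toMajor, toMinor] = to.split(".").map(Number);
  if (toMajor !== fromMajor) return "major";
  if (toMinor !== fromMinor) return "minor";
  return "patch";
}

// Returns the comment to post, or null when a human should look at the PR.
function autoMergeComment(from: string, to: string, maxUpdate: UpdateType = "minor"): string | null {
  const type = classifyUpdate(from, to);
  return RANK[type] <= RANK[maxUpdate] ? "@dependabot merge" : null;
}
```

Note that under semver, a minor bump of a `0.x` package may already be breaking, which this sketch ignores.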

Overview

So here is a quick overview to recap all steps:

1. Dependabot checks for updates and creates a pull request for each one.
2. The auto-merge action checks whether the pull request should be merged automatically and, if so, adds a comment.
3. GitHub Actions tests the pull request using Cypress.
4. When everything is alright, Dependabot merges the pull request.
5. GitHub Actions first tests the merged commit and, if successful, deploys the latest version.
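Put together, the fate of a single Dependabot pull request can be modelled as a small function. Updates outside the auto-merge bounds or with failing tests go to manual review. Otherwise the update is merged, the main branch is tested again, and the site is deployed. This is an illustrative model of the steps listed above with hypothetical names; in reality each step is a separate GitHub Actions job or bot.

```typescript
type Outcome =
  | "manual review: update exceeds auto-merge bounds"
  | "manual review: pull request tests failed"
  | "not deployed: tests on merged commit failed"
  | "deployed";

interface PipelineRun {
  withinBounds: boolean;          // decided by the auto-merge action
  prTestsPass: boolean;           // Cypress run on the pull request
  mergedCommitTestsPass: boolean; // Cypress run on the main branch after merging
}

function pipelineOutcome(run: PipelineRun): Outcome {
  if (!run.withinBounds) return "manual review: update exceeds auto-merge bounds";
  // "@dependabot merge" only merges once the pull request's checks pass.
  if (!run.prTestsPass) return "manual review: pull request tests failed";
  if (!run.mergedCommitTestsPass) return "not deployed: tests on merged commit failed";
  return "deployed";
}
```

Only the happy path, with all three flags `true`, ends in a deployment without anyone touching it.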

Final thoughts

It took me quite some time to get to this point. I've been working on this project for about 3 years, so you can imagine how much effort went into it. About 700 pull requests had been merged manually before I enabled automatic merging, so by then I had a lot of faith in the whole process. It wasn't an overnight decision.

You still might have some questions, so let's try to answer a few of those:

Is this really fully automatic?

Yes, for regular updates no human interaction is required. Human interaction is only needed for major updates and when a test fails. Whenever a test fails, I tend to wait a few days to see whether another update comes along that fixes the error. If that doesn't happen, I check it manually.

Apart from that, a test occasionally fails for external reasons: Composer might be down, Docker Hub can't be reached, etc. In those cases, I have to restart the test, after which the process continues as normal.

How much is actually tested?

Not that much. The checkout is still a grey area from a testing perspective. It can be extremely hard to test, as payments might require access to external providers, and shipping options might be time-sensitive.

However, the process around the cart is tested extensively. Can a customer add a product to their cart? Can they remove it? Can they add a variable (configurable) product? Can they change the quantity? And so on.

How long does the testing and deployment process take?

The speed of GitHub Actions varies a bit, as does fetching external dependencies like Composer packages and Docker images, but running the tests alone takes about 5-6 minutes. Deploying adds another minute.

So, it takes about 15 minutes from pull request to deployment: 5-6 minutes to test the pull request, then Dependabot merges it, and then the main branch is tested and deployed.
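Taking the upper end of each range, the estimate breaks down roughly as follows. The last term is simply what remains to reach the ~15-minute total, so it is an assumption. It is presumably spent on the merge, on waiting for runners, and on fetching Composer and Docker dependencies.

$$
t_{\text{total}} \approx \underbrace{6}_{\text{test PR}} + \underbrace{6}_{\text{test main branch}} + \underbrace{1}_{\text{deploy}} + \underbrace{2}_{\text{merge, queueing, downloads}} \approx 15\ \text{min}
$$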

Does the customer or their customers notice anything of this?

Deployer is a zero-downtime tool. It prepares everything in the background and, when it's ready, switches to the new release. So, no. The only thing my customer might notice is that the count of available updates stays low.

How do you handle updates for paid plugins that are behind a login?

Unfortunately, this still has to be done manually. Luckily for me, that is only a handful of plugins. I look into these only once a month unless WPScan finds a security issue in one of them.

What are the costs for all this automation?

That's hard to say. I have quite a few projects on my GitHub account with actions for testing and deployment, including this blog you are currently reading. So, I have no isolated cost overview, but I expect it would stay within the free monthly GitHub Actions minutes if you don't have any other projects running.

The database fixture is hosted in the client's S3 bucket, so I don't know what it costs. But knowing S3, it won't be much: a few bucks a month at most.

Does this also work with (Magento/Laravel/insert platform)?

Yes. The technique is the same regardless of the platform used. In this case, I had to move a lot of plugins to Composer; with Magento and Laravel, Composer is the default, so you can skip that step there.

Do you have questions or suggestions?

Thanks for reading this far. If you have any questions or comments, feel free to respond.